Machine Learning: Creating an animated avatar that reacts to your voice

When you hear "machine learning", things always seem a little abstract, right? How can machine learning be used practically in your life? This week I turned a photo of myself into a digital avatar with an amazing Python tool and then animated it. So how can we use a Python machine learning tool to create an animated PNG-Tuber avatar?

A PNG-Tuber is a type of V-Tuber who uses an animated 2D image, as opposed to a 3D model, as their virtual counterpart. The avatar is then used instead of a webcam. V-Tubing originated in Japan in 2010 but grew in popularity in the Western world in 2020. PNG-Tubing is a quick and easy entry into V-Tubing.

Tools I used:

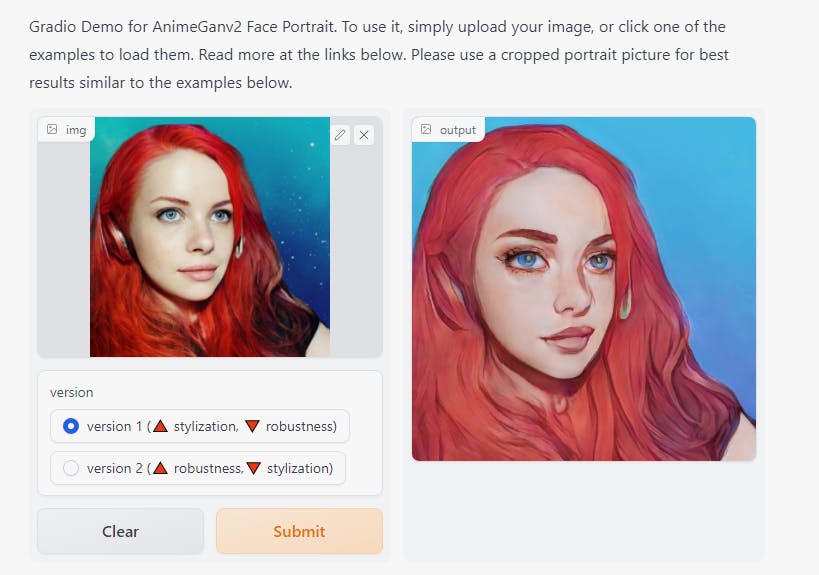

- To turn my photo into a comic avatar, I used AnimeGANv2. You can check it out on Huggingface.co.

- The program that animates your character and lets your voice control it is Gazo Tuber.

- To create the different "scenes" of the animation, I used Krita and GIMP with my graphics tablet.

How to get started!

This is the picture I started with, a portrait of myself. I went to AnimeGANv2 and used the tool there to create a comic version of this image. I didn't use my photo directly because looking at an animated version of my own face might make viewers, and me, quite uneasy.

This is the result I got:

Not terrible at all, if you ask me. Not only does it look like an actual comic face, it also fits the vibe I would like to go for with my animated avatar.

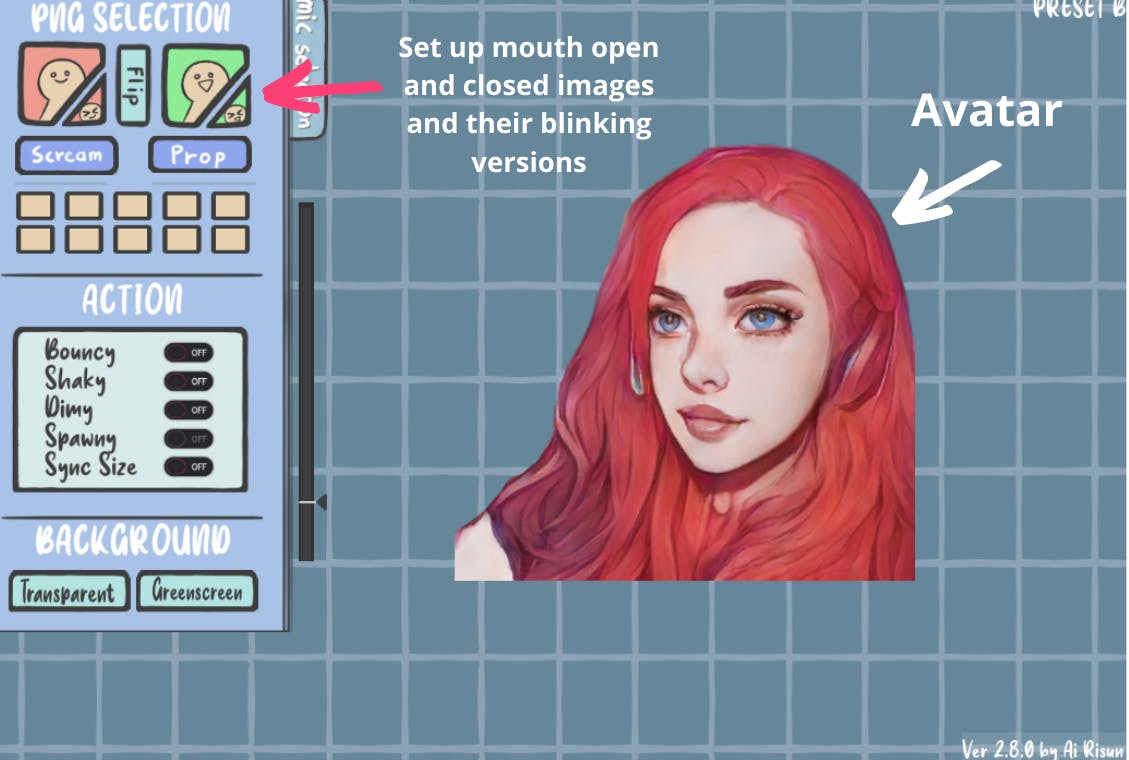

Setting up Gazo Tuber

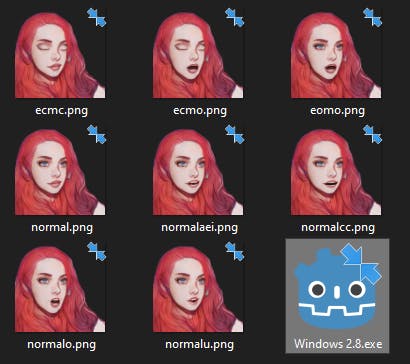

Gazo Tuber requires at least two images, one with an open mouth and one with a closed mouth, to show whether you are speaking or not. If you want your avatar to look a little more alive, you should also upload a closed-eyes version of both, so the program can make your avatar blink. I used Krita and my graphics tablet for this work. Since I had already worked on photo editing and done my fair share of digital painting, this was quite a quick task for me.
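To make the idea more concrete, here is a minimal Python sketch of how a tool like this could decide which of the four images to show. This is not Gazo Tuber's actual code. The file names, the 0.05 loudness threshold, and the blink timing are all assumptions I made up for illustration.

```python
import math


def rms(samples):
    """Root-mean-square loudness of a chunk of audio samples in [-1.0, 1.0]."""
    if not samples:
        return 0.0
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def is_blinking(seconds, interval=4.0, duration=0.15):
    """Assumed blink schedule: eyes close briefly at the start of every interval."""
    return (seconds % interval) < duration


def pick_frame(samples, blinking, threshold=0.05):
    """Choose one of four hypothetical image files from mouth and eye state."""
    mouth = "open" if rms(samples) >= threshold else "closed"
    eyes = "closed" if blinking else "open"
    return f"mouth_{mouth}_eyes_{eyes}.png"
```

Calling `pick_frame` with a silent chunk and no blink gives the resting image, while a loud chunk during a blink gives the open-mouth, closed-eyes image. That is why you need a closed-eyes version for both mouth states.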

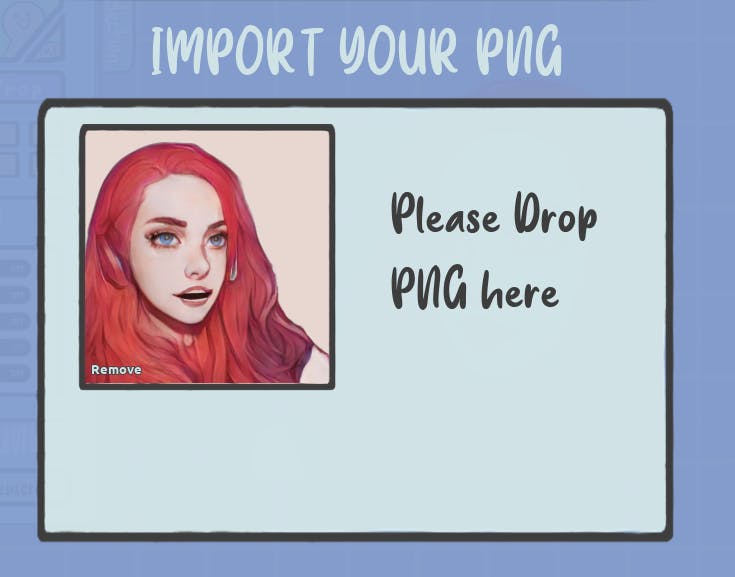

To get a livelier animation when your avatar is talking, you can drag and drop several PNGs with differently shaped and opened mouths onto the importer.

I created eight images in total that make up the animation. I used reference templates for the different mouth shapes so I could draw them from scratch, and I made sure the jaw moves for the right vowels.
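One way to picture how several mouth images could be used is as steps on a loudness scale: the louder you speak, the wider the mouth. I don't know how Gazo Tuber actually chooses between mouth images, so the Python sketch below is only my assumption. The function name, the threshold, and the ceiling value are hypothetical.

```python
def mouth_frame_index(level, frame_count, threshold=0.05, ceiling=0.5):
    """Map a loudness level to a mouth frame index.

    Index 0 is the closed mouth; indexes 1..frame_count-1 are
    increasingly open mouths. All values here are illustrative.
    """
    if frame_count < 2:
        raise ValueError("need at least a closed and an open mouth frame")
    if level < threshold:
        return 0  # silence: closed mouth
    # Scale the loudness above the threshold into the range 0.0..1.0.
    fraction = min((level - threshold) / (ceiling - threshold), 1.0)
    # Spread that range over the open-mouth frames.
    step = min(int(fraction * (frame_count - 1)), frame_count - 2)
    return 1 + step


# Hypothetical file names, ordered from closed to wide open.
MOUTH_FRAMES = ["closed.png", "small.png", "medium.png", "wide.png"]
```

With this, `MOUTH_FRAMES[mouth_frame_index(level, len(MOUTH_FRAMES))]` would return the file to display for the current loudness.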

Once you have set up Gazo Tuber correctly and connected your microphone, you can add the avatar to your screen recording or streaming software as a new scene.

What I love about tech is that combining different skills can lead to the most creative solutions out there. I also think that AI-powered art tools won't replace artists but will support them in the future. Whereas I learned to create references for artwork by printing images, cutting them up, and gluing them together as a collage, artists in the future will have a tool to which they describe what the reference should look like.

If you want to create your own animated speaking avatar, just follow the links at the top of the description!